Citizen, the crime-awareness app with more than 10 million users across the United States, is under growing scrutiny for its use of artificial intelligence to generate crime alerts without human review before publication. According to a detailed report by 404 Media, the company has been pushing out AI-written notifications that contain factual errors, sensitive personal data, and graphic descriptions of incidents, all of which are visible to users before analysts are able to intervene. These revelations come at a critical moment for the company, which recently laid off unionized employees and entered a formal partnership with New York City, signaling that it is positioning itself as an even more integral player in public safety communications.

AI-Generated Alerts and Their Risks

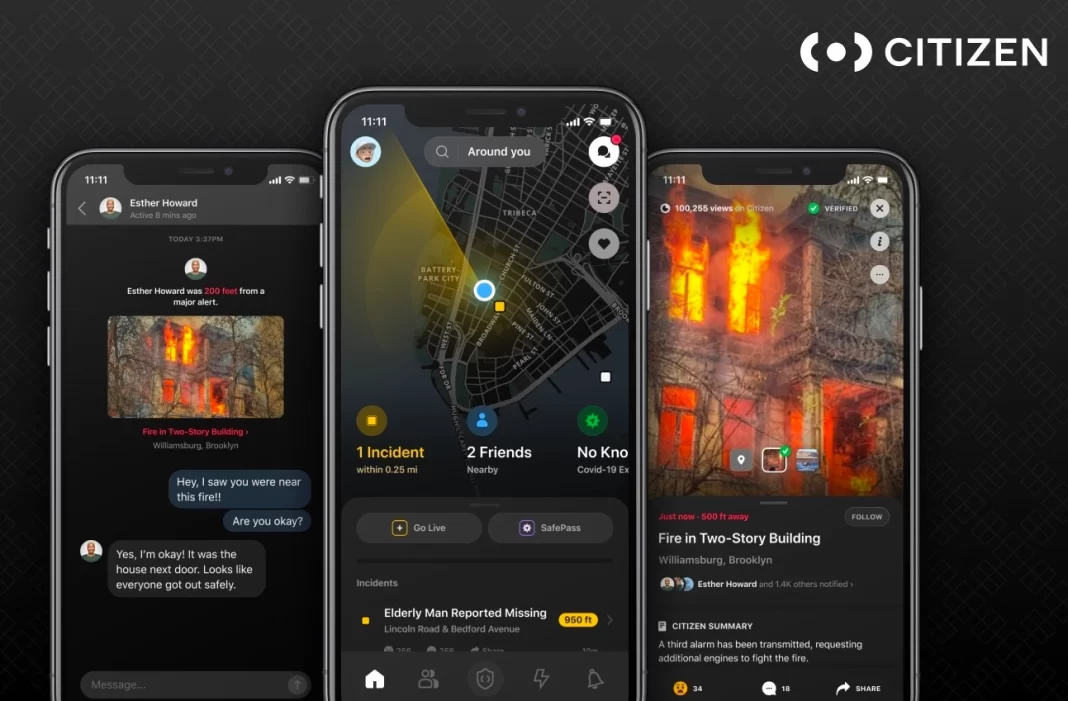

Traditionally, Citizen relied on human analysts to monitor police scanner traffic, verify details, and write alerts that would then be published to the app. These alerts, often accompanied by videos or photos, were designed to keep local communities informed about ongoing events such as fires, accidents, or violent crimes. In recent years, however, Citizen has introduced AI into this workflow, initially using it to help draft alerts before eventually allowing the system to automatically publish notifications without human oversight. Sources who spoke with 404 Media described this shift as one that greatly reduced the role of human analysts, leaving them to clean up mistakes after the fact rather than preventing them from being published in the first place.

The errors introduced by the AI ranged from transcription mistakes to dangerous misrepresentations. In one instance, the system rendered “motor vehicle accident” as “murder vehicle accident,” a change that significantly altered the meaning of the report. Other alerts contained incorrect addresses, so that a single incident appeared at multiple locations and gave users the impression that several unrelated events were unfolding simultaneously. In some cases, the AI included gory details such as “person shot in face” or exposed private information like license plate numbers, both of which violated Citizen’s own guidelines. These issues raised serious questions about whether the system could be trusted to provide accurate and safe updates on public events.

Beyond the factual problems, the timing and phrasing of the alerts also presented risks. According to sources, the AI sometimes framed incidents as though police officers were already on the scene and confirming details, when in reality dispatchers were simply relaying initial information. This kind of misrepresentation could mislead users into believing that law enforcement had already verified an event, potentially fueling unnecessary panic. In fast-moving cases like police chases, the AI was known to generate multiple clustered alerts for the same pursuit, further skewing perceptions of local crime levels. Analysts noted that some of these errors remained live on the app for extended periods, shaping public perception before corrections could be made.

Workforce Changes and Editorial Standards

Citizen’s increased reliance on AI coincided with a reduction in staff. Recently, the company laid off 13 unionized employees, several of whom had reportedly voiced concerns about the declining editorial standards associated with AI-generated alerts. Sources indicated that the layoffs were part of a larger shift that also included outsourcing tasks overseas, with contractors in Nepal and Kenya previously paid between $1.50 and $2 per hour to monitor police radios. These changes suggest that Citizen has been focusing more on cost-cutting and efficiency than on the accuracy and quality of its crime alerts, a move that has raised alarms among former staff and privacy advocates alike.

Former employees said the changes marked a stark departure from earlier practices, when analysts played a central role in filtering scanner traffic and crafting alerts with context and caution. With AI taking over the drafting and publishing process, human oversight was reduced to retroactive editing, which they described as a less effective safeguard. As one source told 404 Media, “AI sometimes just gets stuff horribly wrong and you scratch your head wondering how it got there.” These errors, they added, were not rare outliers but frequent enough to raise persistent concerns inside the company.

Several of the laid-off employees were described as being among the more outspoken critics of the AI rollout. They had reportedly challenged management on the risks of publishing unverified alerts and questioned the company’s apparent shift toward prioritizing quantity of notifications over quality. According to one source, “upper management was more focused and loved the look of more dots on the map and worried less about whether they were legitimate.” This strategy, while making the app appear more active, risked distorting public perceptions of crime and undermining trust in Citizen as a reliable tool.

Public Partnerships and Past Controversies

The controversy over AI-generated alerts comes as Citizen strengthens its partnerships with local governments. In June, New York City announced the launch of an official account on the app called “NYC Public Safety,” which is intended to provide residents with real-time alerts about emergencies ranging from extreme weather events to crimes in progress. In a press release, Mayor Eric Adams praised the partnership, saying that “a huge part of building a safer city is ensuring New Yorkers have the information they need to keep themselves and their loved ones safe.” By aligning with city leadership, Citizen is positioning itself not just as a crowdsourced alert platform, but as a formal communications channel for public safety.

This is not the first time Citizen has drawn criticism for how it handles sensitive information. During the 2021 Palisades fire in Los Angeles, company founder Andrew Frame offered a $30,000 bounty through the app for information leading to the capture of an alleged arsonist. The widely criticized effort turned out to target the wrong person, raising serious concerns about the dangers of crowdsourced investigations. That incident highlighted the risks inherent in using Citizen as a tool for real-time accountability, especially when the information driving those alerts was inaccurate.

Critics argue that the move toward AI-driven alerts exacerbates these existing concerns by stripping away even the limited safeguards human analysts once provided. While AI is capable of processing large volumes of audio data quickly, its mistakes can spread misinformation at scale before anyone has the opportunity to intervene. The app’s popularity and its growing integration with government agencies amplify these risks, as millions of users could be misled by erroneous notifications. Citizen now faces the challenge of balancing speed and efficiency with the responsibility to provide accurate, reliable, and respectful information in a domain where mistakes can have real-world consequences.